Property-based nearest-neighbor search

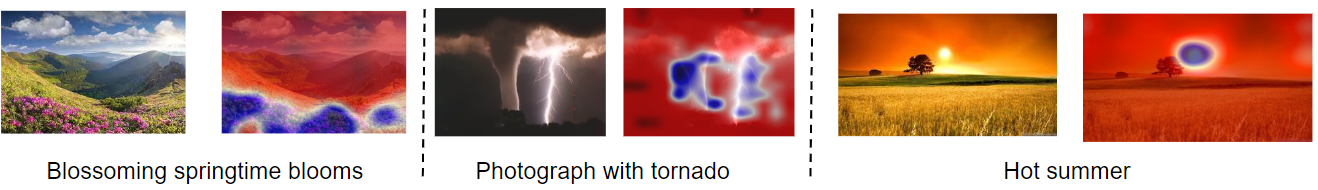

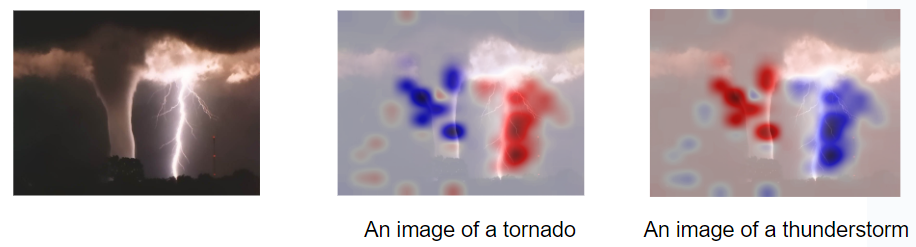

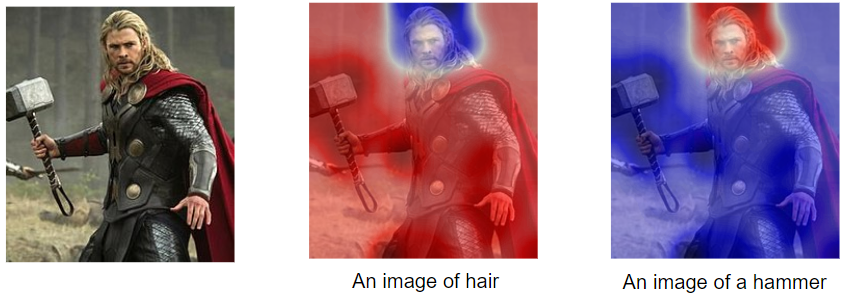

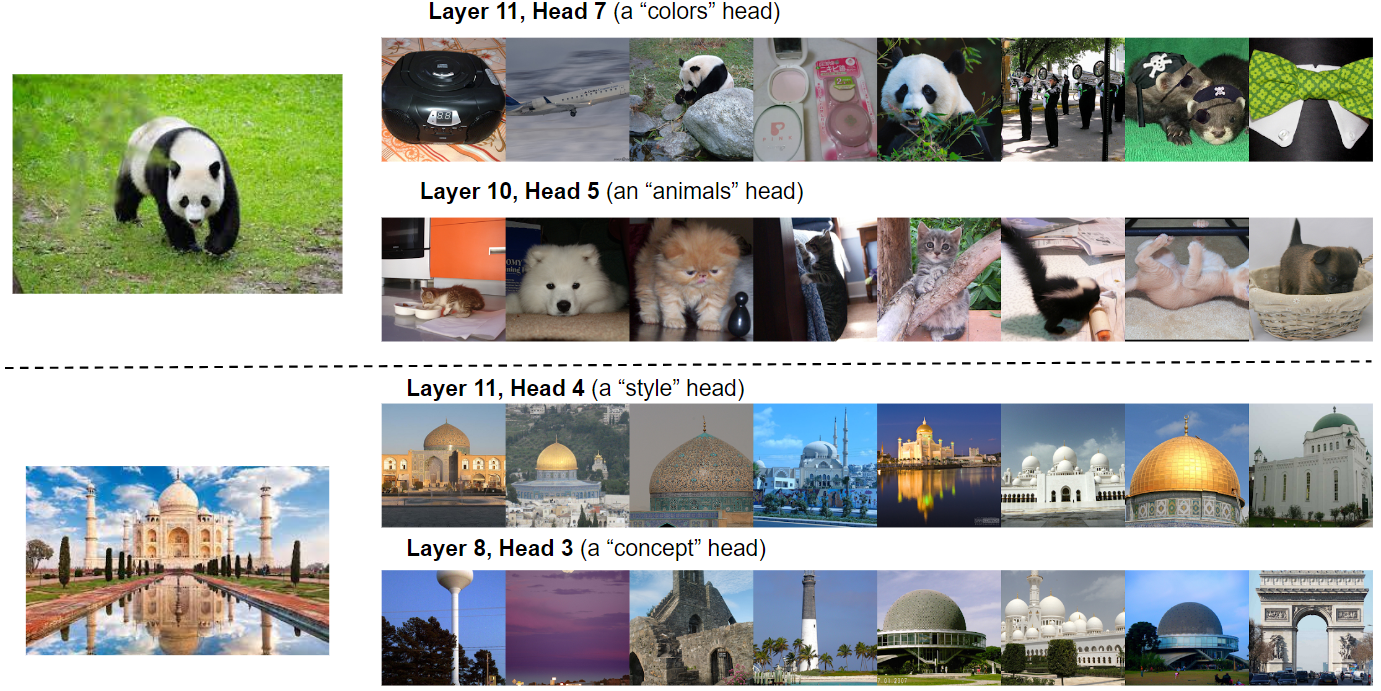

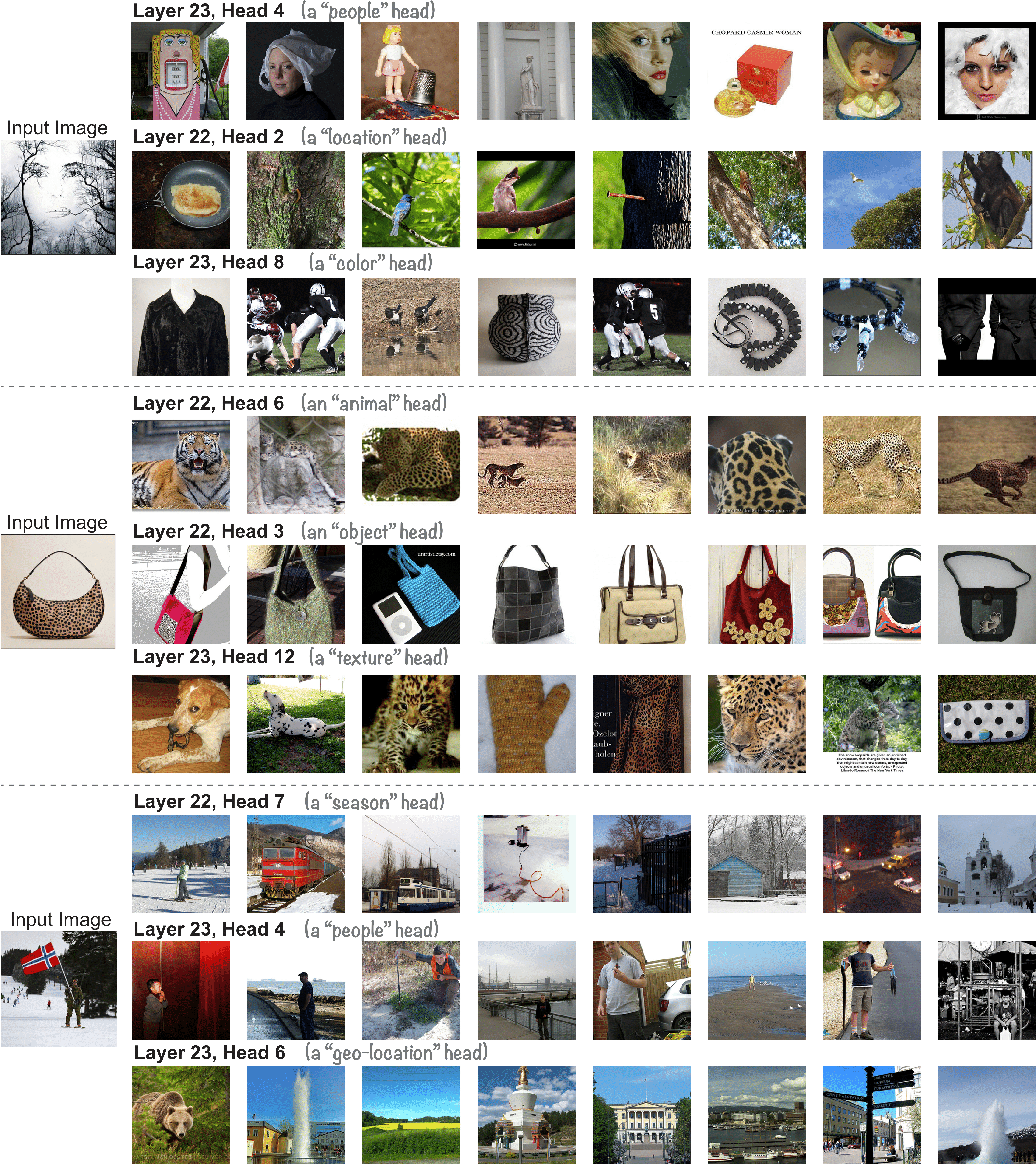

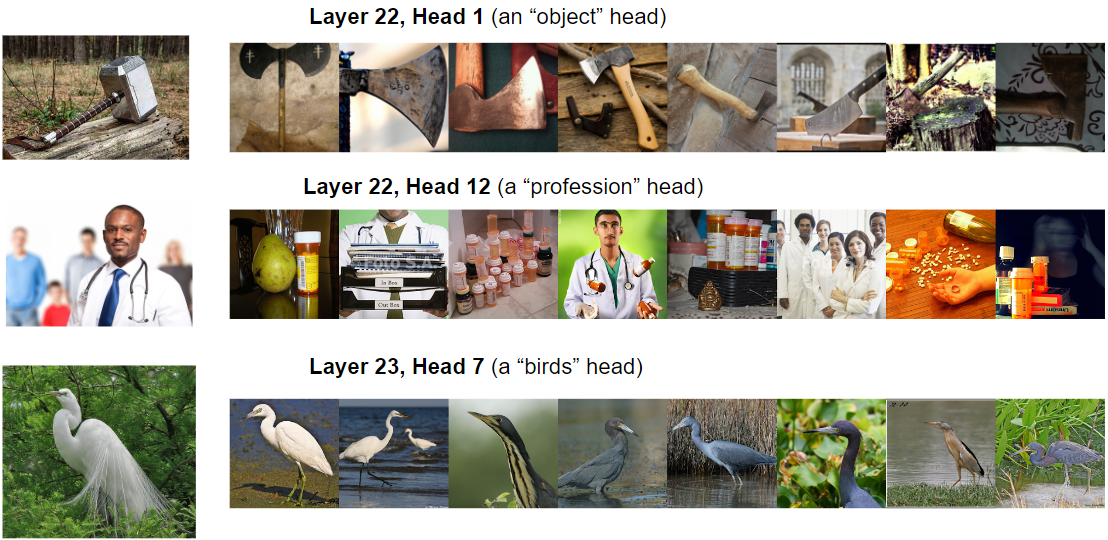

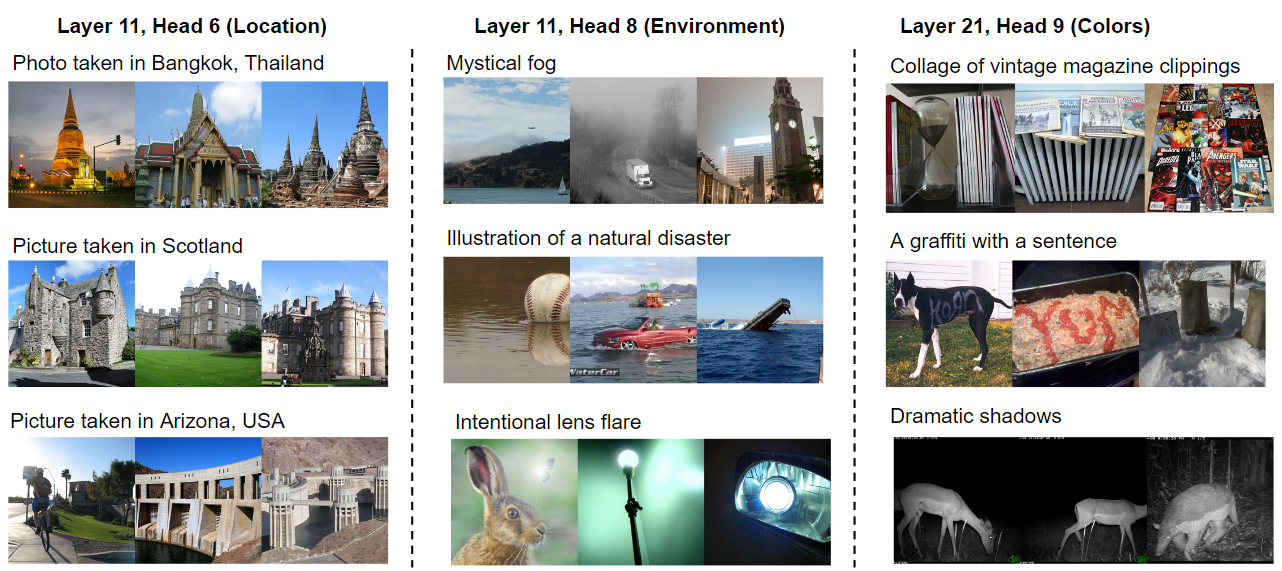

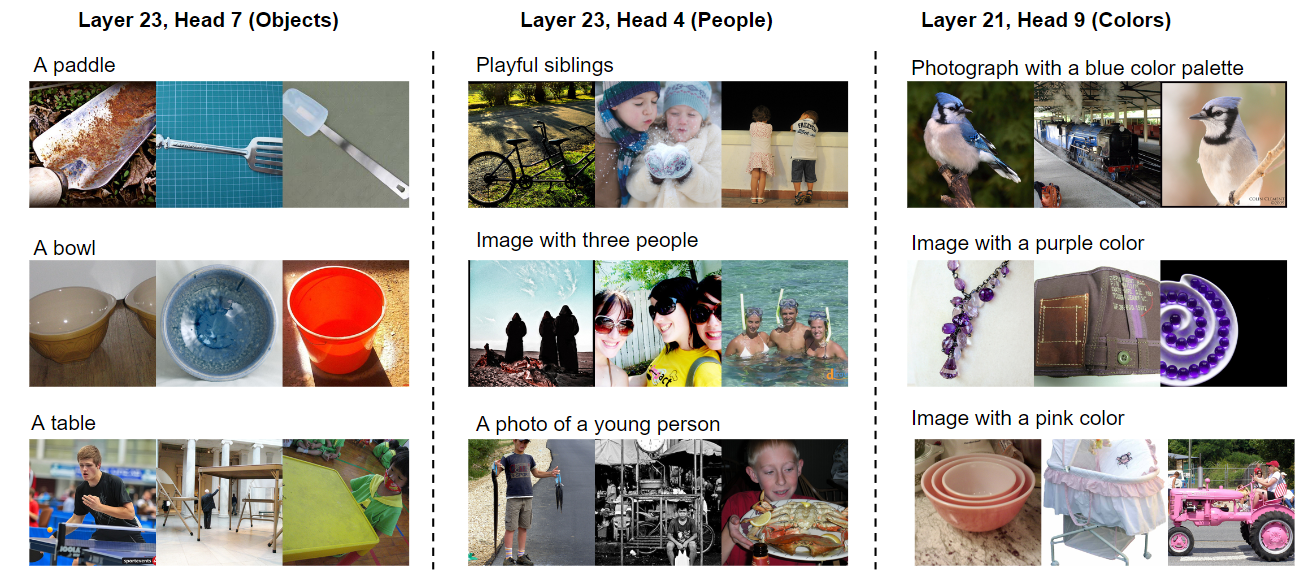

In this analysis, we show that CLIP layers and heads can be characterized by specific properties such as colors, locations, and animals. For a selected property, we retrieve the top-4 most similar images from the ImageNet validation set. The properties were labelled with ChatGPT using in-context learning: we provide text-span outputs and manually labelled properties as in-context examples, and ask ChatGPT to identify the properties of all remaining layers and heads of each model. The properties that are common across the layers and heads of each model are then combined and presented. Below, we show examples for each property. Here, the blue color corresponds to the description provided in the text input.
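The retrieval step can be pictured as a top-k cosine-similarity search. This is a minimal sketch, assuming each image has already been mapped to a feature vector for the selected property (for example, the output of the chosen layer or head); the exact representation is not specified in this section, and the helper names `cosine_similarity` and `top_k_neighbors` are hypothetical.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_neighbors(query: Sequence[float],
                    gallery: Sequence[Sequence[float]],
                    k: int = 4) -> List[int]:
    """Return the indices of the k gallery vectors most similar to `query`.

    Hypothetical helper: `gallery` stands in for property-specific features of
    the ImageNet validation images (an assumption, not the paper's code).
    Ties are broken by the lower index.
    """
    scored = [(cosine_similarity(query, g), i) for i, g in enumerate(gallery)]
    scored.sort(key=lambda s: (-s[0], s[1]))  # highest similarity first
    return [i for _, i in scored[:k]]


# Toy 2-D features standing in for real CLIP representations.
gallery = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0], [0.5, 0.5]]
print(top_k_neighbors([1.0, 0.0], gallery, k=4))  # [0, 2, 4, 1]
```

With real features, the gallery would hold one vector per validation image, and the same top-4 ranking would produce the retrieved panels shown below; for 50,000 images a vectorized or indexed search would be preferable to this pure-Python loop.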

Top-4 nearest neighbors for "location" property. The model used is ViT-B-32 (OpenAI).

The input image is a picture of the Eiffel Tower in Paris, France. The top-4 retrieved images also depict popular landmarks.

Top-4 nearest neighbors for "location" property. The model used is ViT-L-14 (LAION).

Top-4 nearest neighbors for "animals" property. The model used is ViT-B-16 (OpenAI).

Top-4 nearest neighbors for "colors" property. The model used is ViT-B-32 (DataComp). In this example, the input and retrieved images share orange, black, and green colors.

Top-4 nearest neighbors for "pattern" property. The model used is ViT-B-32 (OpenAI). In this example, the input image shows an animal lying on the grass, and the top retrieved images show the same pattern of an animal lying on grass.