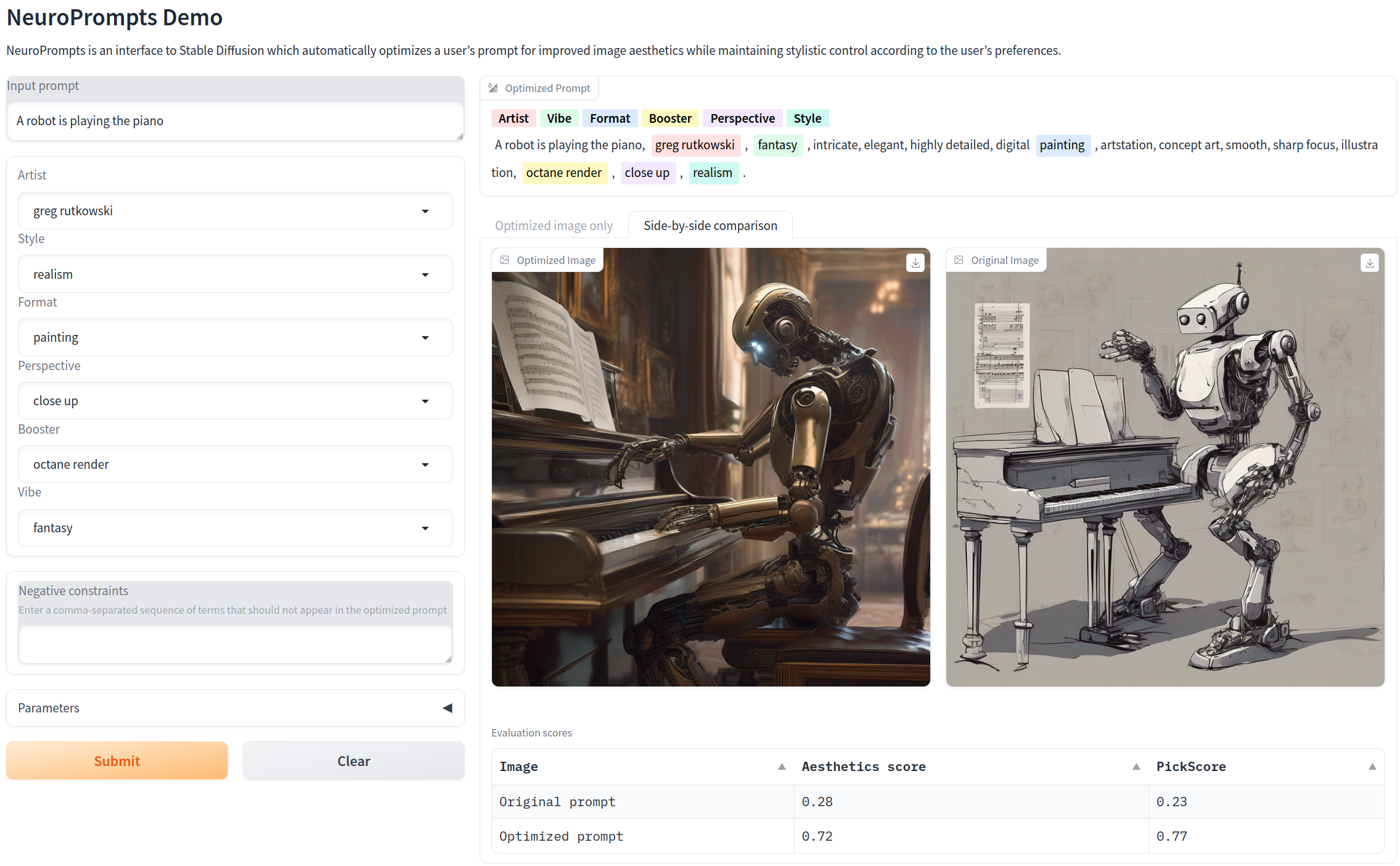

The interface of NeuroPrompts in side-by-side comparison mode

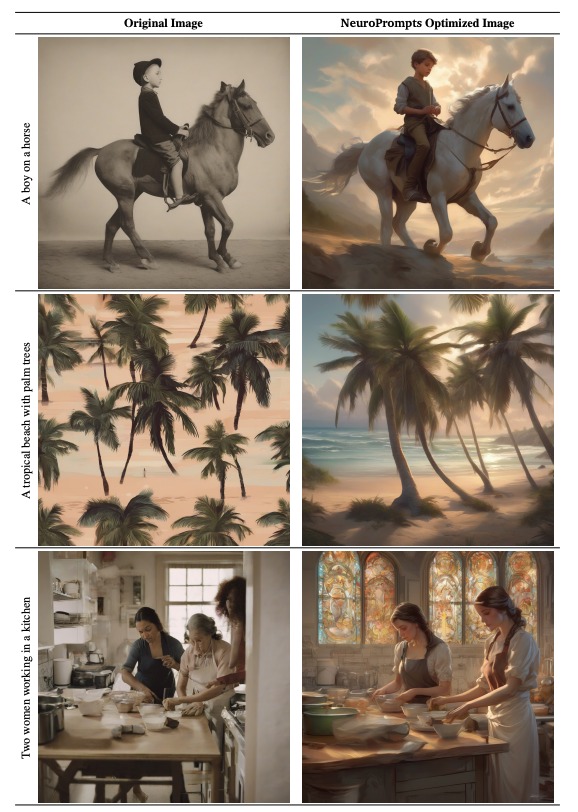

Despite impressive recent advances in text-to-image diffusion models, obtaining high-quality images often requires prompt engineering by humans who have developed expertise in using them. In this work, we present NeuroPrompts, an adaptive framework that automatically enhances a user’s prompt to improve the quality of generations produced by text-to-image models. Our framework utilizes constrained text decoding with a pre-trained language model that has been adapted to generate prompts similar to those produced by human prompt engineers, which enables higher-quality text-to-image generations from resulting prompts and provides user control over stylistic features via constraint set specification. We demonstrate the utility of our framework by creating an interactive application for prompt enhancement and image generation using Stable Diffusion. Additionally, we conduct experiments utilizing a large dataset of human-engineered prompts for text-to-image generation and show that our approach automatically produces enhanced prompts that result in superior image quality.
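To make the idea of constraint set specification more concrete, here is a minimal toy sketch of constrained beam search over prompt modifiers. It is not the NeuroPrompts implementation: the fixed score table `TOY_LOG_SCORES` is a hypothetical stand-in for the adapted language model, the `bonus` term is an assumed way of steering the beam toward satisfying constraints, and all function names are invented for illustration. Each constraint is assumed to be a set of alternative phrases, satisfied when any one of them appears.

```python
from typing import Dict, FrozenSet, List, Tuple

# Hypothetical stand-in for a language model: one fixed log-score per modifier.
TOY_LOG_SCORES: Dict[str, float] = {
    "highly detailed": -0.5,
    "oil painting": -1.2,
    "digital art": -0.9,
    "cinematic lighting": -1.0,
    "trending on artstation": -0.7,
}

# Assumption: a constraint is satisfied if ANY of its phrases is present.
Constraint = FrozenSet[str]


def count_satisfied(chosen: Tuple[str, ...], constraints: List[Constraint]) -> int:
    """Count constraints with at least one of their phrases among `chosen`."""
    return sum(1 for c in constraints if any(p in c for p in chosen))


def constrained_beam_search(
    constraints: List[Constraint],
    steps: int = 3,
    beam_width: int = 3,
    bonus: float = 2.0,
) -> Tuple[str, ...]:
    """Pick `steps` modifiers, keeping the `beam_width` best partial choices."""
    beams: List[Tuple[Tuple[str, ...], float]] = [((), 0.0)]
    for _ in range(steps):
        candidates = []
        for chosen, logp in beams:
            for phrase, score in TOY_LOG_SCORES.items():
                if phrase not in chosen:  # never repeat a modifier
                    candidates.append((chosen + (phrase,), logp + score))
        # Rank by toy model score plus a bonus for each satisfied constraint.
        candidates.sort(
            key=lambda c: c[1] + bonus * count_satisfied(c[0], constraints),
            reverse=True,
        )
        beams = candidates[:beam_width]
    # Only hypotheses that satisfy every constraint are acceptable outputs.
    complete = [b for b in beams if count_satisfied(b[0], constraints) == len(constraints)]
    if not complete:
        raise ValueError("no hypothesis satisfied all constraints")
    return max(complete, key=lambda b: b[1])[0]


def enhance(prompt: str, constraints: List[Constraint]) -> str:
    """Append the selected modifiers to the user's prompt."""
    return ", ".join((prompt,) + constrained_beam_search(constraints))


constraints = [
    frozenset({"oil painting", "digital art"}),  # style: either is acceptable
    frozenset({"cinematic lighting"}),           # lighting: required
]
enhanced = enhance("a cat on a sofa", constraints)
print(enhanced)
```

In this sketch the user keeps control over style by editing `constraints`, while the remaining modifiers are chosen by score alone. A real system would replace the score table with token-level probabilities from a language model.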

@inproceedings{howard-etal-2022-neurocounterfactuals,
    title = "{N}euro{C}ounterfactuals: Beyond Minimal-Edit Counterfactuals for Richer Data Augmentation",
    author = "Howard, Phillip and
      Singer, Gadi and
      Lal, Vasudev and
      Choi, Yejin and
      Swayamdipta, Swabha",
    booktitle = "Findings of the Association for Computational Linguistics: EMNLP 2022",
    month = dec,
    year = "2022",
    address = "Abu Dhabi, United Arab Emirates",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2022.findings-emnlp.371",
    doi = "10.18653/v1/2022.findings-emnlp.371",
    pages = "5056--5072",
    abstract = "While counterfactual data augmentation offers a promising step towards robust generalization in natural language processing, producing a set of counterfactuals that offer valuable inductive bias for models remains a challenge. Most existing approaches for producing counterfactuals, manual or automated, rely on small perturbations via minimal edits, resulting in simplistic changes. We introduce NeuroCounterfactuals, designed as loose counterfactuals, allowing for larger edits which result in naturalistic generations containing linguistic diversity, while still bearing similarity to the original document. Our novel generative approach bridges the benefits of constrained decoding, with those of language model adaptation for sentiment steering. Training data augmentation with our generations results in both in-domain and out-of-domain improvements for sentiment classification, outperforming even manually curated counterfactuals, under select settings. We further present detailed analyses to show the advantages of NeuroCounterfactuals over approaches involving simple, minimal edits.",
}