Output Filters

The output filters only process the actions.

Action Filters

class rl_coach.filters.action.AttentionDiscretization(num_bins_per_dimension: Union[int, List[int]], force_int_bins=False)

Discretizes an AttentionActionSpace. The attention action space defines actions as choosing sub-boxes within a given box. For example, consider an image of size 100x100, where the action is choosing a crop window of size 20x20 to attend to in the image. AttentionDiscretization discretizes the possible crop windows into a finite number of options and maps a discrete action space onto those crop windows.

Warning! This currently works only for attention spaces with 2 dimensions.

- Parameters

num_bins_per_dimension – Number of discrete bins to use for each dimension of the action space

force_int_bins – If set to True, all the bins will represent integer coordinates in space.
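The crop-window example above can be sketched in plain Python. This is only an illustration of the idea, not rl_coach's implementation: the helper name `attention_windows` is hypothetical, and the sketch assumes that the bins tile the box into non-overlapping cells, each described by its low and high corners.

```python
def attention_windows(low, high, num_bins_per_dimension, force_int_bins=False):
    """Enumerate the crop windows of a 2-D box (hypothetical helper).

    Each window is returned as ((row_low, col_low), (row_high, col_high)).
    Assumption: bins tile the box into a grid of non-overlapping cells.
    """
    if isinstance(num_bins_per_dimension, int):
        num_bins_per_dimension = [num_bins_per_dimension] * 2
    rows, cols = num_bins_per_dimension

    def edges(lo, hi, n):
        # n bins need n + 1 evenly spaced edges
        points = [lo + i * (hi - lo) / n for i in range(n + 1)]
        return [int(p) for p in points] if force_int_bins else points

    row_edges = edges(low[0], high[0], rows)
    col_edges = edges(low[1], high[1], cols)

    windows = []
    for i in range(rows):
        for j in range(cols):
            windows.append(((row_edges[i], col_edges[j]),
                            (row_edges[i + 1], col_edges[j + 1])))
    return windows


# A 100x100 image with 5 bins per dimension gives 25 windows of size 20x20.
windows = attention_windows([0, 0], [100, 100], 5)
print(len(windows), windows[0], windows[-1])
```

A discrete action index then simply selects one entry of the returned list.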

-

class

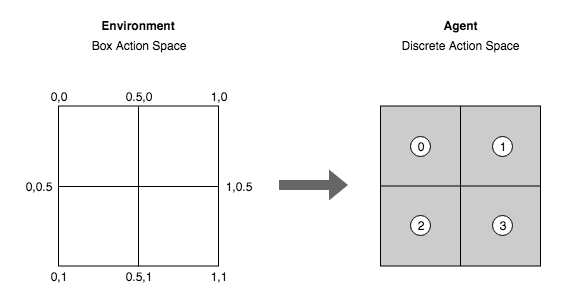

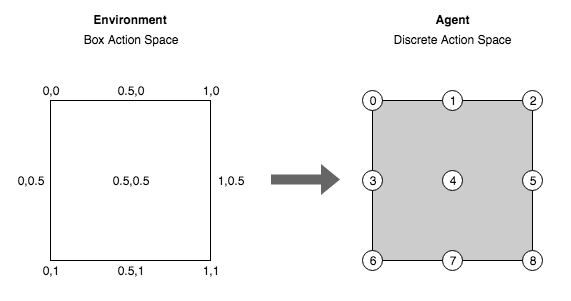

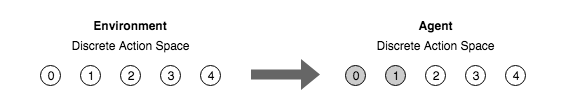

rl_coach.filters.action.BoxDiscretization(num_bins_per_dimension: Union[int, List[int]], force_int_bins=False)[source]¶ Discretizes a continuous action space into a discrete action space, allowing the usage of agents such as DQN for continuous environments such as MuJoCo. Given the number of bins to discretize into, the original continuous action space is uniformly separated into the given number of bins, each mapped to a discrete action index. Each discrete action is mapped to a single N dimensional action in the BoxActionSpace action space. For example, if the original actions space is between -1 and 1 and 5 bins were selected, the new action space will consist of 5 actions mapped to -1, -0.5, 0, 0.5 and 1.

- Parameters

num_bins_per_dimension – The number of bins to use for each dimension of the target action space. The bins are spread uniformly over this space.

force_int_bins – Force the bins to represent only integer actions. For example, if the action space is in the range 0-10 and there are 5 bins, the bins will be placed at 0, 2, 5, 7, 10 instead of 0, 2.5, 5, 7.5, 10.
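The bin placement described above can be reproduced with a short standard-library sketch. The function `discretize_box` is a hypothetical name used for illustration and is not part of rl_coach; it assumes that bins include both endpoints of each dimension and that integer bins are obtained by truncation, which matches the two examples in the text.

```python
from itertools import product


def discretize_box(low, high, num_bins_per_dimension, force_int_bins=False):
    """Return the list of N-dimensional actions, one per discrete index.

    Hypothetical helper: bins are evenly spaced and include both endpoints.
    """
    if isinstance(num_bins_per_dimension, int):
        num_bins_per_dimension = [num_bins_per_dimension] * len(low)

    bins_per_dim = []
    for lo, hi, n in zip(low, high, num_bins_per_dimension):
        if n == 1:
            bins = [float(lo)]
        else:
            step = (hi - lo) / (n - 1)
            bins = [lo + i * step for i in range(n)]
        if force_int_bins:
            bins = [int(b) for b in bins]  # assumption: truncate toward zero
        bins_per_dim.append(bins)

    # Every combination of per-dimension bins is one discrete action.
    return [list(action) for action in product(*bins_per_dim)]


print(discretize_box([-1], [1], 5))
print(discretize_box([0], [10], 5, force_int_bins=True))
```

With more than one dimension, the number of discrete actions is the product of the per-dimension bin counts, so it grows quickly as dimensions are added.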

-

class

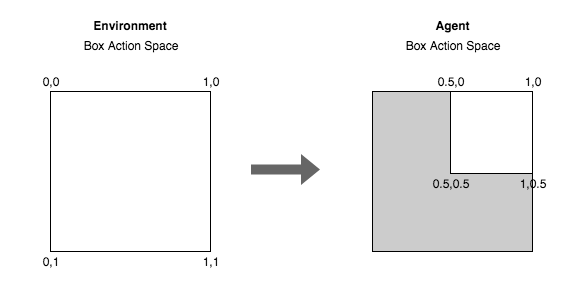

rl_coach.filters.action.BoxMasking(masked_target_space_low: Union[None, int, float, numpy.ndarray], masked_target_space_high: Union[None, int, float, numpy.ndarray])[source]¶ Masks part of the action space to enforce the agent to work in a defined space. For example, if the original action space is between -1 and 1, then this filter can be used in order to constrain the agent actions to the range 0 and 1 instead. This essentially masks the range -1 and 0 from the agent. The resulting action space will be shifted and will always start from 0 and have the size of the unmasked area.

- Parameters

masked_target_space_low – The lowest values that can be chosen in the target action space.

masked_target_space_high – The highest values that can be chosen in the target action space.
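The shift described above can be illustrated with a minimal sketch. The function names are hypothetical and the code is not taken from rl_coach; it assumes that the agent-facing space starts at 0 in every dimension and that the filter adds the masked low bound back before the action reaches the environment.

```python
def masked_space_bounds(masked_low, masked_high):
    """Bounds of the space the agent sees: starts at 0, sized like the unmasked area."""
    low = [0.0] * len(masked_low)
    high = [h - l for l, h in zip(masked_low, masked_high)]
    return low, high


def to_target_action(action, masked_low):
    """Shift an agent action back into the unmasked part of the target space."""
    return [a + l for a, l in zip(action, masked_low)]


# Environment range is [-1, 1]; the agent is restricted to [-0.5, 0.5].
low, high = masked_space_bounds([-0.5], [0.5])
print(low, high)                          # the agent chooses within [0, 1]
print(to_target_action([0.25], [-0.5]))   # shifted back into [-0.5, 0.5]
```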

-

class

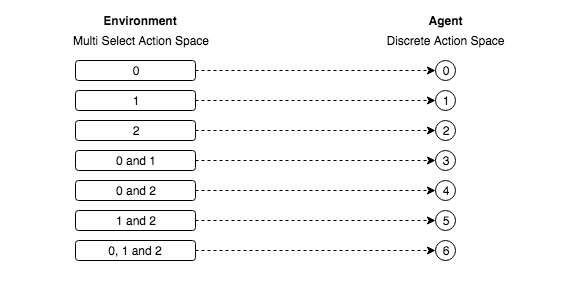

rl_coach.filters.action.PartialDiscreteActionSpaceMap(target_actions: List[Union[int, float, numpy.ndarray, List]] = None, descriptions: List[str] = None)[source]¶ Partial map of two countable action spaces. For example, consider an environment with a MultiSelect action space (select multiple actions at the same time, such as jump and go right), with 8 actual MultiSelect actions. If we want the agent to be able to select only 5 of those actions by their index (0-4), we can map a discrete action space with 5 actions into the 5 selected MultiSelect actions. This will both allow the agent to use regular discrete actions, and mask 3 of the actions from the agent.

- Parameters

target_actions – A partial list of actions from the target space to map to.

descriptions – A list of descriptions, one for each of the actions.
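At its core, such a partial map is a lookup table from the agent's discrete index to a selected target action. The sketch below shows the idea only; `make_partial_map`, the flag layout of the MultiSelect actions, and the choice of which 5 actions to expose are all hypothetical and not taken from rl_coach.

```python
def make_partial_map(target_actions, descriptions=None):
    """Build a function mapping a discrete index to one of the selected target actions."""
    if descriptions is not None and len(descriptions) != len(target_actions):
        raise ValueError("expected one description per target action")

    def to_target(action_index):
        if not 0 <= action_index < len(target_actions):
            raise IndexError("action index outside the discrete action space")
        return target_actions[action_index]

    return to_target


# Hypothetical MultiSelect space: on/off flags for (jump, right, left), 8 actions in total.
all_actions = [(j, r, l) for j in (0, 1) for r in (0, 1) for l in (0, 1)]

# Expose only 5 of the 8 actions; the remaining 3 are masked from the agent.
selected = [all_actions[i] for i in (0, 1, 2, 4, 6)]
to_target = make_partial_map(
    selected, ["noop", "left", "right", "jump", "jump+right"]
)

print(to_target(4))  # the agent's action 4 becomes the (jump, right) combination
```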

class rl_coach.filters.action.FullDiscreteActionSpaceMap

Full map between two countable action spaces. This works similarly to PartialDiscreteActionSpaceMap, but maps the entire source action space onto the entire target action space, without masking any actions. For example, if there are 10 MultiSelect actions in the output space, the actions 0-9 will be mapped to those MultiSelect actions.

-

class

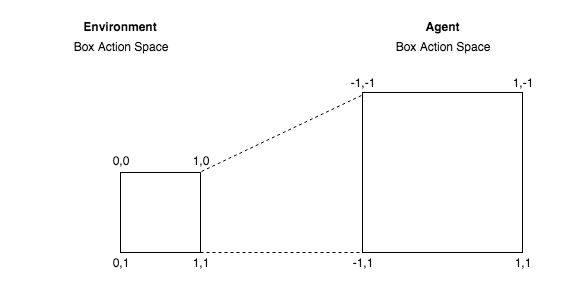

rl_coach.filters.action.LinearBoxToBoxMap(input_space_low: Union[None, int, float, numpy.ndarray], input_space_high: Union[None, int, float, numpy.ndarray])[source]¶ A linear mapping of two box action spaces. For example, if the action space of the environment consists of continuous actions between 0 and 1, and we want the agent to choose actions between -1 and 1, the LinearBoxToBoxMap can be used to map the range -1 and 1 to the range 0 and 1 in a linear way. This means that the action -1 will be mapped to 0, the action 1 will be mapped to 1, and the rest of the actions will be linearly mapped between those values.

- Parameters

input_space_low – The low values of the desired (agent-facing) action space.

input_space_high – The high values of the desired (agent-facing) action space.
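The linear mapping in the example above amounts to rescaling each dimension independently. The following sketch uses a hypothetical function name and takes the environment bounds as explicit arguments; it is an illustration of the arithmetic, not rl_coach's code.

```python
def linear_box_to_box(action, input_low, input_high, output_low, output_high):
    """Linearly map an action from the input box to the output box, per dimension."""
    mapped = []
    for a, in_lo, in_hi, out_lo, out_hi in zip(
        action, input_low, input_high, output_low, output_high
    ):
        fraction = (a - in_lo) / (in_hi - in_lo)  # position inside the input range
        mapped.append(out_lo + fraction * (out_hi - out_lo))
    return mapped


# The agent acts in [-1, 1]; the environment expects actions in [0, 1].
for a in (-1.0, 0.0, 1.0):
    print(a, linear_box_to_box([a], [-1.0], [1.0], [0.0], [1.0]))
```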