Persistent Advantage Learning

Action space: Discrete

References: Increasing the Action Gap: New Operators for Reinforcement Learning

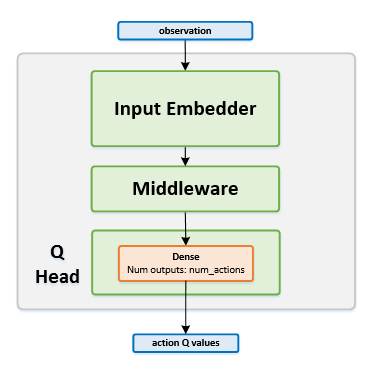

Network Structure

Algorithm Description

Training the network

Sample a batch of transitions from the replay buffer.

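Start by calculating the initial target values in the same manner as they are calculated in DDQN: \(y_t^{DDQN}=r(s_t,a_t)+\gamma Q(s_{t+1},\arg\max_a Q(s_{t+1},a))\). As in DDQN, the action is selected with the online network and its value is evaluated with the target network.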

The action gap \(V(s_t)-Q(s_t,a_t)\) should then be subtracted from each of the calculated targets. To calculate the action gap, run the target network on the current states and get the \(Q\) values for all the actions. Then estimate \(V\) as the maximum predicted \(Q\) value for the current state: \(V(s_t)=\max_a Q(s_t,a)\).

For advantage learning (AL), subtract the action gap, weighted by a predefined parameter \(\alpha\), from the targets \(y_t^{DDQN}\): \(y_t=y_t^{DDQN}-\alpha \cdot (V(s_t)-Q(s_t,a_t))\).

For persistent advantage learning (PAL), the target network is also used to calculate the action gap for the next state, \(V(s_{t+1})-Q(s_{t+1},a_{t+1})\), where \(a_{t+1}\) is chosen by running the next states through the online network and selecting the action with the highest predicted \(Q\) value. Finally, the targets are defined as: \(y_t=y_t^{DDQN}-\alpha \cdot \min(V(s_t)-Q(s_t,a_t),V(s_{t+1})-Q(s_{t+1},a_{t+1}))\).

Train the online network using the current states as inputs, and with the aforementioned targets.

Once every few thousand steps, copy the weights from the online network to the target network.
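The target computation described in the steps above can be sketched in NumPy as follows. This is a minimal illustration, not Coach's actual implementation: the function name `pal_targets` and its argument names are hypothetical, the Q-value arrays are assumed to have already been produced by the online and target networks, and terminal-state handling is omitted because the description above does not cover it.

```python
import numpy as np


def pal_targets(rewards, actions, q_target_curr, q_target_next, q_online_next,
                gamma=0.99, alpha=0.9, persistent=True):
    """Compute AL or PAL targets for a batch (hypothetical helper).

    q_target_curr: target-network Q values for s_t,     shape (batch, n_actions)
    q_target_next: target-network Q values for s_{t+1}, shape (batch, n_actions)
    q_online_next: online-network Q values for s_{t+1}, shape (batch, n_actions)
    """
    rewards = np.asarray(rewards, dtype=float)
    actions = np.asarray(actions)
    idx = np.arange(len(actions))

    # DDQN target: the online network picks a_{t+1}, the target network evaluates it.
    next_actions = np.argmax(q_online_next, axis=1)
    y_ddqn = rewards + gamma * q_target_next[idx, next_actions]

    # Action gap for the current state: V(s_t) - Q(s_t, a_t).
    gap_curr = q_target_curr.max(axis=1) - q_target_curr[idx, actions]
    if not persistent:
        # Advantage learning (AL).
        return y_ddqn - alpha * gap_curr

    # Action gap for the next state: V(s_{t+1}) - Q(s_{t+1}, a_{t+1}).
    gap_next = q_target_next.max(axis=1) - q_target_next[idx, next_actions]
    # Persistent advantage learning (PAL): subtract the smaller of the two gaps.
    return y_ddqn - alpha * np.minimum(gap_curr, gap_next)


# Single-transition example with two actions.
example = dict(
    rewards=[1.0],
    actions=[0],
    q_target_curr=np.array([[1.0, 3.0]]),
    q_target_next=np.array([[4.0, 5.0]]),
    q_online_next=np.array([[4.0, 1.0]]),
    gamma=0.5,
    alpha=0.5,
)
al = pal_targets(persistent=False, **example)
pal = pal_targets(persistent=True, **example)
```

In this example the current-state gap is 2 and the next-state gap is 1, so the PAL target is reduced by less than the AL target.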

class rl_coach.agents.pal_agent.PALAlgorithmParameters

Parameters

pal_alpha – (float) A factor that weights the amount by which the advantage learning update will be taken into account.

persistent_advantage_learning – (bool) If set to True, the persistent mode of advantage learning will be used, which encourages the agent to take the same actions one after the other instead of changing actions.

monte_carlo_mixing_rate – (float) The proportion of Monte Carlo values to mix into the targets of the network. The Monte Carlo values are the total discounted returns, and they can help reduce the time it takes for the network to update to newly seen values, since they are not based on bootstrapping from the current network values.
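As an illustration of what mixing Monte Carlo values into the targets could look like, the sketch below computes the total discounted returns of one episode and blends them with bootstrapped targets. The linear interpolation form and the helper names `discounted_returns` and `mix_monte_carlo` are assumptions made for this example; the parameter description above does not specify the exact formula.

```python
import numpy as np


def discounted_returns(rewards, gamma):
    """Total discounted return from each step to the end of the episode."""
    returns = np.zeros(len(rewards))
    running = 0.0
    # Accumulate backwards: G_t = r_t + gamma * G_{t+1}.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


def mix_monte_carlo(td_targets, mc_returns, mixing_rate):
    """Blend bootstrapped targets with Monte Carlo returns (assumed linear mix)."""
    td_targets = np.asarray(td_targets, dtype=float)
    return (1.0 - mixing_rate) * td_targets + mixing_rate * mc_returns


mc = discounted_returns([1.0, 1.0, 1.0], gamma=0.5)
mixed = mix_monte_carlo([2.0, 2.0, 2.0], mc, mixing_rate=0.5)
```

Under this assumption, a mixing rate of 0 leaves the bootstrapped targets unchanged and a rate of 1 replaces them entirely with the Monte Carlo returns.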